Match Picker

Overview

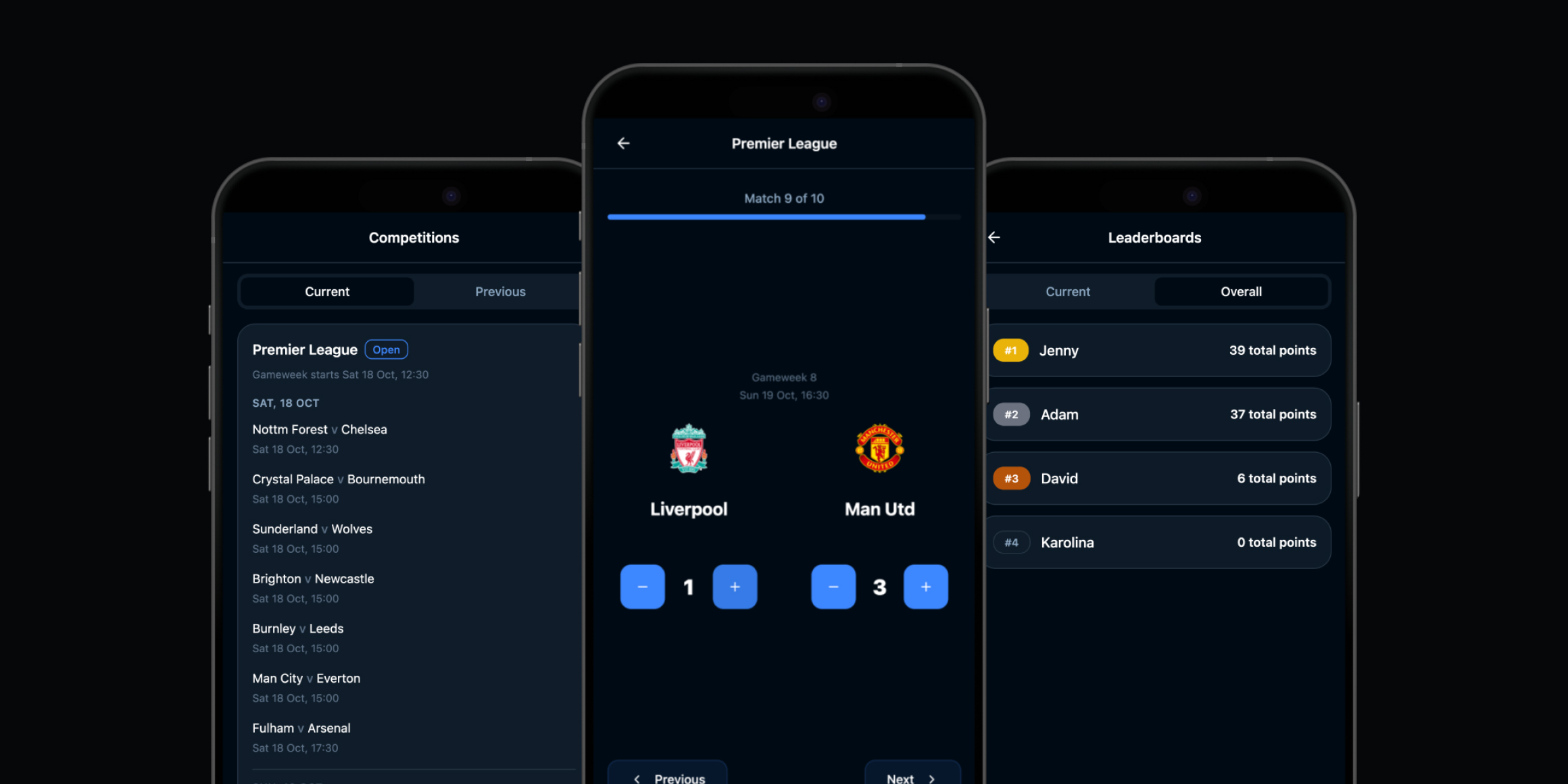

Match Picker started as a small personal project: a football prediction app for friends. It quickly evolved into a deeper exploration of how AI-assisted coding can help designers turn ideas into real, working products.

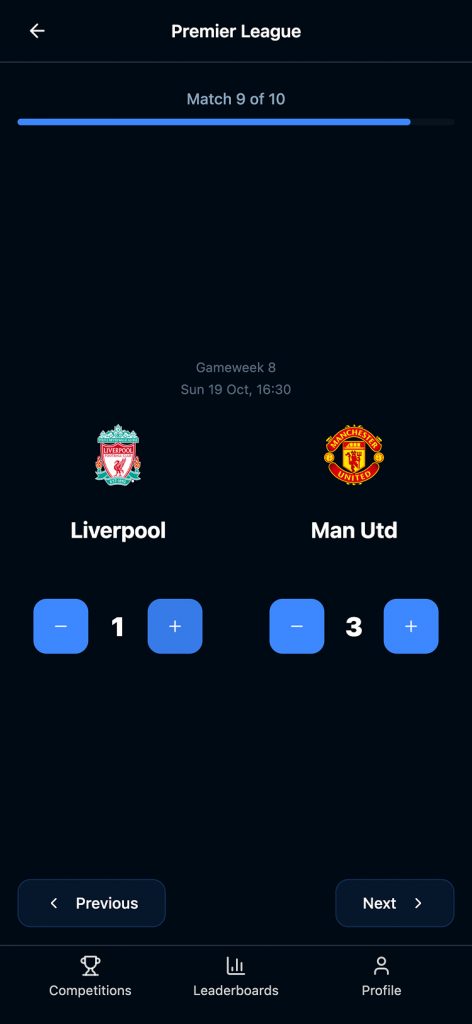

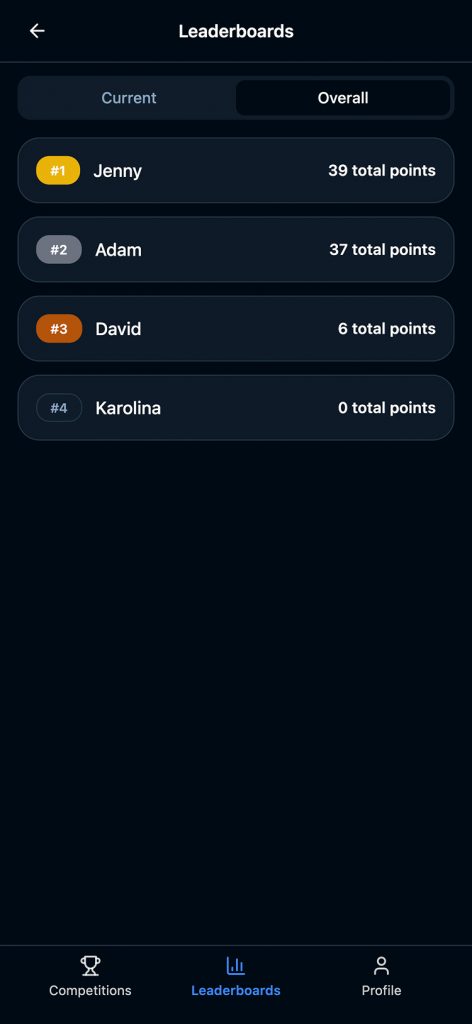

The concept is simple: users predict upcoming Premier League fixtures, and the app automatically updates results and leaderboards. Beneath that simplicity, it became a hands-on experiment in learning through building, combining AI-generated code, live data integration, and automation to produce a functional MVP.

Goal

The goal was to learn by doing and explore how AI can support a designer through the full product lifecycle, from concept to code.

I had some background in coding and structure from my early working days, and I wanted to see how far that foundation could be extended with modern AI tools. The aim wasn’t perfection; it was understanding how to collaborate with AI, structure logic, and make something real that could evolve over time.

Approach

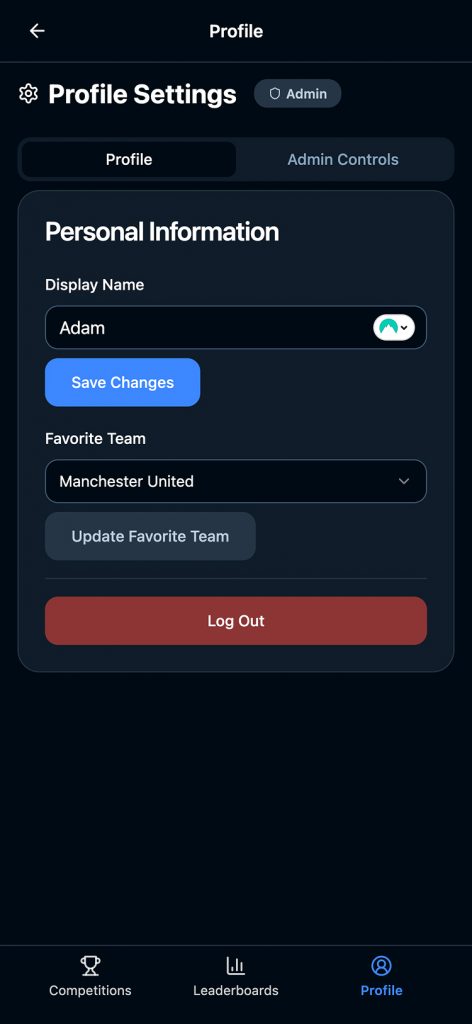

I began in Cursor, using AI to scaffold React components and set up the application’s basic flow. Once the foundations were in place, I moved to Lovable, which made it easier to iterate, test, and host everything in one environment. Supabase powered authentication and data storage, while the app also connected to a live football API to pull fixture and result data dynamically.

This setup allowed Match Picker to become fully automated. It fetches live fixtures, opens and closes predictions by gameweek, and updates results automatically once matches finish.
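To make the gameweek automation concrete, here is a minimal TypeScript sketch of how a prediction window could be derived from fixture data. It is not the app's actual code: the `Fixture` shape, the `gameweekState` function, and the rule that predictions lock at the first kickoff of the gameweek are all assumptions made for illustration.

```typescript
// Hypothetical fixture shape; field names are assumptions, not the app's real schema.
interface Fixture {
  id: number;
  gameweek: number;
  kickoff: string; // ISO 8601 timestamp
  status: "scheduled" | "live" | "finished";
}

type WindowState = "open" | "closed" | "settled";

// Assumed rule: predictions for a gameweek lock at its first kickoff,
// and the gameweek is settled once every fixture in it has finished.
function gameweekState(fixtures: Fixture[], gameweek: number, now: Date): WindowState {
  const inWeek = fixtures.filter((f) => f.gameweek === gameweek);
  if (inWeek.length === 0) return "closed"; // nothing to predict on
  if (inWeek.every((f) => f.status === "finished")) return "settled";
  const firstKickoff = Math.min(...inWeek.map((f) => Date.parse(f.kickoff)));
  return now.getTime() < firstKickoff ? "open" : "closed";
}
```

Deriving the state from fixture data on each request, rather than storing an open/closed flag, is one way to avoid manual updates: the window changes as soon as the fixture data does.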

The project was my first full dive into vibe coding, where development happens conversationally with AI. It was fast and exciting to get the first version running, but once the basics worked, most of the learning came through debugging, testing, and refining.

I also set up a design system partway through the build. In hindsight, it should have been done at the start. Establishing a structure early would have made the codebase more consistent and easier to scale, reinforcing how traditional design thinking still applies even when working directly in code.

Outcome

Match Picker is now a fully working MVP, used by a small group of friends to predict and track football results each week.

It automatically connects to a live API to pull match data, stores user predictions and results in Supabase, and manages gameweeks and scoring logic without any manual updates.
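As an illustration of what scoring logic like this can look like, the TypeScript sketch below awards points per prediction and totals them into a leaderboard. The point values (3 for an exact score, 1 for the correct outcome, 0 otherwise) and all names (`Score`, `pointsFor`, `leaderboard`) are assumptions for the example, not the rules or code Match Picker actually uses.

```typescript
interface Score {
  home: number;
  away: number;
}

// Reduce a scoreline to its outcome so predictions can be compared on result alone.
function outcome(s: Score): "home" | "draw" | "away" {
  if (s.home > s.away) return "home";
  if (s.home < s.away) return "away";
  return "draw";
}

// Assumed scoring rule: 3 points for the exact score, 1 for the right outcome, else 0.
function pointsFor(prediction: Score, result: Score): number {
  if (prediction.home === result.home && prediction.away === result.away) return 3;
  return outcome(prediction) === outcome(result) ? 1 : 0;
}

// Hypothetical row pairing a user's prediction with the final result.
interface ScoredPick {
  user: string;
  prediction: Score;
  result: Score;
}

// Sum points per user and sort by points (descending), then by name for stable ties.
function leaderboard(picks: ScoredPick[]): Array<{ user: string; points: number }> {
  const totals: Record<string, number> = {};
  for (const p of picks) {
    totals[p.user] = (totals[p.user] ?? 0) + pointsFor(p.prediction, p.result);
  }
  return Object.keys(totals)
    .map((user) => ({ user, points: totals[user] }))
    .sort((a, b) => b.points - a.points || a.user.localeCompare(b.user));
}
```

Keeping `pointsFor` as a pure function makes the rule easy to test in isolation and to change later without touching how results are fetched or stored.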

Beyond the app itself, the process taught me how to work productively within AI-assisted development. It was about finding the balance between speed and structure, and learning when to trust the AI’s output versus when to step in and fix things manually.

It reinforced that AI isn’t a shortcut; it’s a multiplier for learning and experimentation, especially when paired with a solid understanding of design principles and code structure.

Learnings

This project was full of lessons, both technical and creative.

- AI accelerates creation but not completion. Getting the first version running came quickly, but stability, edge cases, and real-world logic all required hands-on debugging.

- Plan your structure early. Setting up the design system and data structure at the start would have reduced friction later.

- AI needs direction. The clearer and more specific the prompts, the better the results. Vague requests often created more rework.

- Debugging is the real teacher. Fixing bugs taught me more about component logic, data flow, and state management than any tutorial could.

Match Picker helped me understand not only how to use AI to build, but how to collaborate with it effectively, shaping its output through design intent and problem-solving.